So, what is crawl budget?

There are limits to how much time Googlebot can spend crawling any single site. Google describes a site’s crawl budget as the “amount of time and resources that Google devotes to crawling a site”. It is also commonly described as the number of pages a crawler will crawl within a given period of time. Crawl budgets differ from site to site, and your site’s crawl budget is established automatically by Google.

Note that not everything crawled on your site will necessarily be indexed; after it has been crawled, each page must be evaluated, consolidated, and assessed to determine whether it will be indexed. New content is being created and posted online all the time. Working with a professional digital marketing services company can help you optimize your web pages so that they are crawled and indexed smoothly.

Key Elements Determining Crawl Budget

Two main elements determine crawl budget: crawl capacity limit and crawl demand.

Crawl capacity limit

Googlebot wants to crawl your website without overwhelming your servers, so it calculates a crawl capacity limit. This limit is the maximum number of simultaneous parallel connections Googlebot can use to crawl the site, together with the time delay between fetches. It is meant to cover all of your important content without overloading your servers. Two factors move the limit up or down. The first is crawl health: if the site responds quickly, the limit goes up, and if the site slows down or returns server errors, the limit goes down. The second is Google’s own crawling limits: Google’s infrastructure is vast but not infinite, so crawling resources still have to be allocated across sites.

Crawl demand

Crawl demand depends on factors such as the size of the site, update frequency, page quality, and relevance compared to other sites. The key determinants are perceived inventory, popularity, and staleness. Unless instructed otherwise, Googlebot aims to crawl all the URLs it knows about on your site; if many of them are duplicates or irrelevant, a lot of crawl time is wasted. This is why controlling perceived inventory is crucial for crawl efficiency. More popular URLs are crawled more often to keep them fresh in the index, and known pages are recrawled to pick up changes before they go stale. Site-wide events such as site moves can also trigger increased crawl demand so that content is re-indexed under the new URLs.

Taking crawl capacity and crawl demand together, Google defines a site’s crawl budget as the set of URLs that Googlebot can and wants to crawl. Even if the crawl capacity limit isn’t reached, if crawl demand is low, Googlebot will crawl your site less.

Crawl Budget and SEO

Faced with a nearly infinite quantity of content, Googlebot can find and crawl only a percentage of it, and Google can index only a portion of what is crawled. URLs are like bridges between a website and a search engine’s crawler: Google must be able to find and crawl your URLs to reach the content you post. In practical terms, crawl budget works out to the average number of URLs search engine bots will crawl on your site before leaving. So, it is very important to optimize your site for crawl budget so that search engine bots do not spend their time on unimportant pages and miss your important ones.

Search engines have three primary functions:

- Crawl: Crawling through the content for each URL they find

- Index: After crawling the content, they store and organize it. Once a page is in the index, it is in the running to be displayed for relevant queries

- Rank: They return the pieces of content that best match a searcher’s query, which means that results are ordered from most relevant to least relevant

If your URL structure is complicated, crawlers will waste time tracing and retracing their steps. If your URLs are organized and lead directly to distinct content, Googlebot can reach that content easily instead of crawling unimportant or empty pages, or crawling the same content over and over again through different URLs.
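To make the “same content through different URLs” problem concrete, here is a minimal Python sketch that groups URLs which differ only in parameters that do not change the page. The parameter names in `IGNORED_PARAMS` and the example URLs are assumptions for illustration; the right list depends on your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change page content on this example site.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url):
    """Return a canonical form of `url` with the ignored parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # parameter order should not create a "new" URL
    path = parts.path or "/"
    # Drop the fragment: it is never sent to the server.
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), "")
    )


def group_duplicates(urls):
    """Map each canonical URL to the list of crawled variants that collapse to it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return dict(groups)


if __name__ == "__main__":
    crawled = [
        "https://Example.com/shoes?utm_source=mail&color=red&sessionid=1",
        "https://example.com/shoes?color=red&sid=abc",
        "https://example.com/shoes?color=blue",
    ]
    for canonical, variants in group_duplicates(crawled).items():
        print(canonical, "<-", len(variants), "variant(s)")
```

Any group with more than one variant is a candidate for a canonical tag or a cleaner internal link, since a crawler would otherwise fetch each variant separately.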

For very large sites that contain tens of thousands of pages, or sites that auto-generate pages based on URL parameters, it is very important to prioritize what to crawl, when, and how much of the hosting server’s resources can be allocated to crawling. It is not a bad idea to block crawlers from content that you definitely do not care about.

A site that contains fewer than a thousand URLs will be crawled efficiently most of the time. Make sure that you do not block the crawler’s access to pages to which you have added other directives, such as canonical or noindex tags. If a search engine bot is blocked from a page, it cannot see the instructions on that page.
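One way to catch this conflict is to check your noindex or canonical pages against your robots.txt rules. The sketch below uses Python’s standard `urllib.robotparser`; the robots.txt content and the URL list are made-up examples. Note that this parser does simple prefix matching and does not reproduce every detail of how Googlebot interprets rules (wildcards, for instance), so treat the result as a first check rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs in `urls` that `user_agent` may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    # Hypothetical pages that carry a noindex or canonical tag.
    pages_with_directives = [
        "https://example.com/cart/checkout",
        "https://example.com/search",
        "https://example.com/products/shoes",
    ]
    for url in blocked_urls(ROBOTS_TXT, pages_with_directives):
        print("Blocked, so its on-page directives cannot be read:", url)
```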

According to Google’s analysis, having many low-value-add URLs can negatively affect a site’s crawling and indexing. Low-value-add URLs fall into the following categories (in order of significance):

- Faceted navigation and session identifiers

- On-site duplicate content

- Soft error pages

- Hacked pages

- Infinite spaces and proxies

- Low quality and spam content

Crawl budget matters for SEO. Since you want Google to discover as many of your site’s important pages as possible within a given period of time, it is very important to optimize crawl budget by eliminating the factors that waste it. If you do not optimize crawl budget, some pages on the site may not be crawled or indexed, and pages that are not indexed are not going to rank.

To get a sense of your crawl budget, log in to your Google Search Console account, open Settings, and then open the Crawl stats report (older versions of Search Console listed it under Crawl and then Crawl Stats). There you can see how many crawl requests Googlebot makes to your site each day. The number is likely to change, but it will give you a solid idea of how many of your site’s pages you can expect to be crawled within a given period of time.
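If you also have access to your server logs, you can cross-check that figure yourself. The following Python sketch counts requests per day whose user agent mentions Googlebot. It assumes the common Apache/Nginx “combined” log format with a `[10/Mar/2024:06:25:13 +0000]` style timestamp, and the sample lines are invented. A user-agent string can be spoofed, so this is only an approximation of real Googlebot traffic.

```python
import re
from collections import Counter

# Matches the date part of a timestamp such as [10/Mar/2024:06:25:13 +0000].
LOG_DATE = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")

# Invented sample lines in the assumed "combined" log format.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Mar/2024:06:25:13 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Mar/2024:07:01:44 +0000] "GET /b HTTP/1.1" 200 734 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Mar/2024:07:02:10 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    '66.249.66.1 - - [11/Mar/2024:03:15:02 +0000] "GET /c HTTP/1.1" 404 120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]


def googlebot_hits_per_day(log_lines):
    """Count log lines per day whose text mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue
        match = LOG_DATE.search(line)
        if match:
            hits[match.group(1)] += 1
    return hits


def average_daily_hits(hits):
    """Average number of Googlebot requests over the days present in `hits`."""
    return sum(hits.values()) / len(hits) if hits else 0.0


if __name__ == "__main__":
    per_day = googlebot_hits_per_day(SAMPLE_LOG)
    for day, count in per_day.items():
        print(day, count)
    print("average per day:", average_daily_hits(per_day))
```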

Tips to Optimize Crawl Budget for SEO

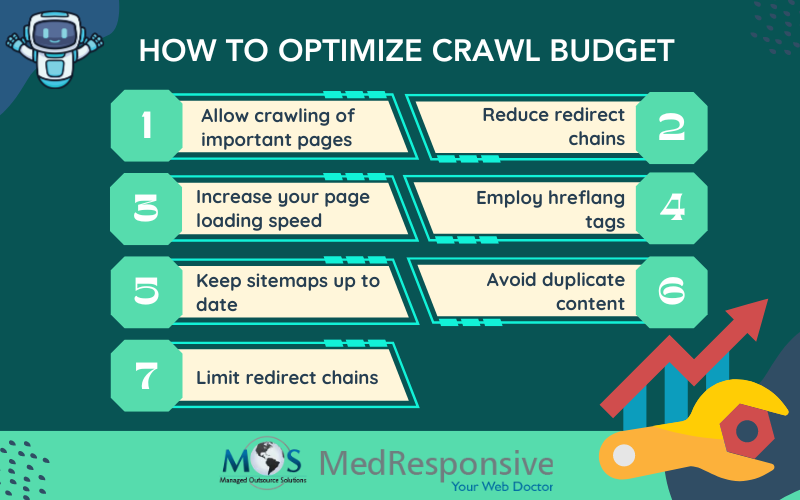

Follow these steps to optimize crawl budget for better SEO:

- Ensure crawling of important pages and block content that does not deliver any value: This is a very important step. You can load your robots.txt into a website auditor tool of your choice, which lets you allow or block crawling of any page of your domain in seconds. Then simply upload the edited file, and it’s done. Robots.txt can be managed by hand, but for a very large website where frequent adjustments might be needed, using a tool is much easier.

- Avoid duplicate content: Eliminate duplicate content to focus crawling on unique content rather than unique URLs. Ensure that each page on your site has unique, valuable content that gives users distinct information or experiences. Implement canonical tags to indicate the preferred version of similar or identical pages, guiding search engines to index the correct URL and consolidate ranking signals. Use 301 redirects to send users and search engines from duplicate pages to the canonical URL, consolidating link equity. Additionally, handle URL parameters consistently so that search engines do not index multiple variations of the same content. Regularly monitor your site for duplication using tools like Google Search Console, and address any instances promptly. By proactively managing duplicate content, website owners can improve their site’s visibility, credibility, and search engine rankings.

- Keep your sitemaps up to date: Keeping your sitemaps up to date is crucial for ensuring that search engines can efficiently crawl and index your website’s content. Regularly updating your sitemaps allows search engine bots to discover new pages, changes to existing content, and removed URLs, ensuring that your site’s latest information is accurately reflected in search results. By maintaining up-to-date sitemaps, you provide search engines with clear guidance on which pages to prioritize for indexing, helping to improve your site’s overall visibility and search engine rankings. Additionally, regularly submitting updated sitemaps through Google Search Console or other search engine webmaster tools ensures that search engines are promptly notified of any changes to your site’s structure or content, facilitating quicker indexing and better search performance.

- Increase your page loading speed: Improving your page loading speed plays a key role in optimizing crawl budget allocation for your website. Faster loading times enable search engine crawlers to efficiently navigate through your site’s content, as they can crawl more pages within the allocated time frame. When pages load quickly, crawlers spend less time waiting for content to render, and crawl more pages during each visit. Faster-loading pages also enhance user experience, reducing bounce rates and increasing engagement, which can indirectly improve search engine rankings.

- Use HTML whenever possible: Google’s crawler has become much better at crawling and rendering JavaScript, and it can also process XML. Flash, by contrast, is no longer indexed by Google. Other search engines are not always as capable with JavaScript, so whenever possible, stick to HTML. This will not hurt your chances with any crawler.

- Avoid redirect chains: This is a common-sense approach to website health. An unreasonable number of 301 and 302 redirects in a row will hurt your crawl budget, and at a certain point the crawler may stop following the chain without reaching the page you need indexed. One or two redirects here and there will not cause much damage, but include them only when absolutely necessary.

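- Take care of your URL parameters: Always remember that crawlers treat separate URLs as separate pages, which wastes valuable crawl budget. Google used to let you declare such parameters in the URL Parameters tool in Google Search Console, but that tool has been retired. Instead, point parameterized variants at a single preferred URL with canonical tags, link internally to that preferred URL, and use robots.txt rules to keep crawlers away from parameter combinations that add no value. This saves crawl budget and avoids raising concerns about duplicate content as well.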

- Hreflang tags are vital: Crawlers use hreflang tags to understand your localized pages. Google uses the hreflang attribute to serve the correct regional or language URL in search results based on the searcher’s country and language preferences. You should let Google know about the localized versions of your pages as accurately as possible.

First, use `<link rel="alternate" hreflang="lang_code" href="url_of_page" />` in your page header, where “lang_code” is the code for a supported language. You can also use the `<loc>` element in your XML sitemap for any given URL, with child entries that point to the localized versions of the page.

- Find HTTP errors and don’t let them hurt your crawl budget: 404 and 410 pages eat into your crawl budget and also hurt user experience. Every URL that Google fetches, including CSS and JavaScript files, consumes one unit of your crawl budget, so do not waste it on 404 or 503 pages. Take time to find broken links and server errors on your site and fix them. SE Ranking and Screaming Frog are useful tools for an SEO website audit.
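As an illustration of the redirect chain tip in the list above, here is a small Python sketch that finds chains in a redirect map you already have, for example one exported from a site audit tool. The `REDIRECTS` mapping and the one-hop threshold are assumptions for the example; nothing is fetched over the network.

```python
# Hypothetical redirect map: source path -> target path.
REDIRECTS = {
    "/old": "/interim",
    "/interim": "/new",
    "/promo": "/sale",
}


def resolve_chain(start_url, redirects):
    """Follow `redirects` from `start_url` and return the URLs visited in order."""
    chain = [start_url]
    while chain[-1] in redirects:
        next_url = redirects[chain[-1]]
        already_seen = next_url in chain
        chain.append(next_url)
        if already_seen:  # redirect loop: record it once and stop
            break
    return chain


def long_chains(redirects, max_hops=1):
    """Return the chains that need more than `max_hops` redirects."""
    result = {}
    for url in redirects:
        chain = resolve_chain(url, redirects)
        if len(chain) - 1 > max_hops:
            result[url] = chain
    return result


if __name__ == "__main__":
    for start, chain in long_chains(REDIRECTS).items():
        print(" -> ".join(chain))
```

Each chain reported this way can usually be shortened by pointing the first URL straight at the final destination.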

Crawl budget is clearly important for SEO. If the number of pages on your site exceeds the crawl budget, some of those pages will not be indexed, and a page that Google does not index is not going to rank for anything. Experts providing affordable digital marketing services can help you optimize crawl budget for SEO.
